It’s time to give up the bundler!

I recently stopped bundling my JS in my Rails setup, and opted for a more straightforward approach leveraging ES Modules and importmaps to load and run Javascript in the browser. I’m convinced this is the way to go, and I wanted to write a brief explainer on ESM + importmaps and why you should think about ditching the bundler in your next project.

In this post, I want to cover briefly what ES Modules are, what they can replace, the historical reasons why we’ve used bundlers in the past, and why continuing to do so might be hurting your page performance.

What are Javascript Modules?

Broadly, a JS module is a way of lexically scoping code to be made selectively available in another module. Concretely, it’s just a file that declares exports to be available in another file.

There are two main module systems:

- CommonJS (which you’ll be familiar with if you’ve ever written Javascript for Node)

- ES Modules (now supported in the browser and used in Deno)

Here’s the same module written for both CJS and ESM:

const merge = require("lodash").merge;

function addPi(target) {

return merge(target, { pi: 3.14 });

}

module.exports = addPi;

In this example, when this module is loaded, Node would go looking for lodash in our node_modules/ folder, and make your function, addPi, available as a default export to any other modules requiring it.

import _ from "https://esm.sh/lodash@4.17.21";

export default function addPi(target) {

return _.merge(target, { pi: 3.14 });

}

Here, we’re able to specify that lodash should be imported from a remote URL, in this case the CDN esm.sh.

Browser support

CommonJS modules have never been supported in the browser, while ESM is now supported by Node, the vast majority of browsers, and alternative JS runtimes like Deno and Bun.

What’s an importmap?

Importmaps are a way to tell the browser where to find Javascript (specifically, ES Modules) that are included via an import from another module.

You might have spotted the immediate problem with the ES Module above, which is one of dependency management.

Generally, when you’re using outside code, you want to do two things:

- Use specific versions of your dependencies, so that your code is deterministic.

- Use the same version of that dependency everywhere in your code that it’s used.

Without one source of truth for specifying dependency versions, your module resolutions could be scattered across dozens or even hundreds of files! Imagine the headache of updating any of them.

Importmaps are a way to solve both of these problems, by predeclaring where modules should be resolved from, and (if you’re using a good CDN), which version is resolved.

In this way, you can think of an importmap for the browser as serving the same function that package.json and yarn.lock files serve in Node/CommonJS contexts.

Here’s the same script above rewritten for the browser, using importmaps.

<head>

<script type="importmap">

{

"imports": {

"lodash": "https://esm.sh/lodash@4.17.21"

}

}

</script>

<script type="module">

import _ from "lodash";

function addPi(target) {

return _.merge(target, { pi: 3.14 });

}

</script>

</head>

What’s going on here?

In our html doc, we’ve declared two script tags, one with type="importmap", and one with type="module".

When the browser reaches the second script tag, it will parse and execute the inline module.

As it begins to parse the module, the first statement the interpreter will come across is an import of lodash as _ from the named package, lodash.

Because lodash is not an absolute or relative import, it will refer to the importmap to resolve the module.

Having resolved lodash to https://esm.sh/lodash@4.17.21, it will fetch the module, and continue executing the inline module.
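To make those resolution steps concrete, here is a minimal sketch of how a specifier might be mapped to a URL. The function name resolveSpecifier and the example.com base URL are made up for illustration, and the real algorithm in the HTML spec does more (scopes, stricter URL handling), so treat this as an approximation rather than what any browser actually runs.

```javascript
// Simplified sketch of importmap resolution. Assumption: no "scopes",
// and only the "imports" table is consulted.
function resolveSpecifier(specifier, importMap, baseUrl) {
  // Relative specifiers ("./", "../", "/") resolve against the importing module.
  if (/^(\.\.?\/|\/)/.test(specifier)) {
    return new URL(specifier, baseUrl).href;
  }

  const imports = importMap.imports ?? {};

  // Exact match on a bare specifier such as "lodash".
  if (Object.prototype.hasOwnProperty.call(imports, specifier)) {
    return imports[specifier];
  }

  // Prefix match on keys ending in "/" (e.g. "preact/"), longest key first.
  const prefix = Object.keys(imports)
    .filter((key) => key.endsWith("/") && specifier.startsWith(key))
    .sort((a, b) => b.length - a.length)[0];
  if (prefix !== undefined) {
    return new URL(specifier.slice(prefix.length), imports[prefix]).href;
  }

  // Anything else must already be an absolute URL.
  try {
    return new URL(specifier).href;
  } catch {
    throw new TypeError(`Unmapped bare specifier: ${specifier}`);
  }
}

const importMap = {
  imports: { lodash: "https://esm.sh/lodash@4.17.21" },
};
const pageUrl = "https://example.com/app/main.js"; // hypothetical page

const lodashUrl = resolveSpecifier("lodash", importMap, pageUrl);
const localUrl = resolveSpecifier("./math.js", importMap, pageUrl);
```

Here lodashUrl comes straight from the importmap, while localUrl is resolved relative to the page and never touches the map.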

Browser support

Importmaps are supported by only 85% of browsers, but there’s a nice polyfill that you can use to bump that up into the 90s if you care to do so.

Why were we bundling again?

In my estimation there are three historical reasons, and two modern reasons for why we bundle our Javascript.

Wait—what’s bundling?

Tools like Webpack and Rollup take your Javascript and all of its dependencies (like React or lodash), as well as normalized references to external assets (like images) and squish them into one big file, called a “bundle”.

Historical reason 1: the global namespace

Before we started bundling code, we loaded all of our own code as well as external dependencies via script tags. The problem is that all non-ESM Javascript loaded in the browser is loaded into the global namespace. Commonly, library authors would attach themselves to the global namespace under a name they hoped was unique enough not to conflict with your code or other libraries.

You might be old enough to remember writing some code like this:

<head>

<script src="https://code.jquery.com/jquery-3.7.0.slim.min.js"></script>

</head>

<body>

<div id="my-div"></div>

<script>

var myDiv = $("#my-div");

</script>

</body>

Where is $ coming from? Well, it’s declared in jquery-3.7.0.slim.min.js, and available everywhere thereafter.

I’m not old enough to remember this…

Good for you.

Above, we’re importing jQuery by adding a script tag to the head of our html pointing at the jQuery CDN.

When the browser encounters this tag, it fetches the jQuery source from the CDN, and loads it all into the global namespace.

jQuery cleverly exposes all of its functionality through the global identifier $, so that its functions don’t collide with any of yours, or any from other libraries you might be importing.

You yourself had to be careful to not shadow any variable or function names between your own files, or risk unpredictable behavior. In this world, organizing code into multiple files and trying to avoid naming collisions and issues with hoisting is a really bad time.

Bundlers help solve this problem in two ways:

- Allowing you to resolve dependencies in one place, your package.json file.

- Enabling the use of CommonJS-style imports between files or dependencies, making it easier to reason about the dependency graph and lexically scope your code.

Bundlers do this by rewriting all of your code and your dependencies to be independently namespaced, then replacing calls to require or import with new functions that reference other parts of the bundle.

Show me

Imagine this very complex code that we want to run in the browser:

const { add, subtract } = require("./math");

console.log(add(1, 2));

console.log(subtract(1, 2));

// math.js

function add(a, b) {

return a + b;

}

function subtract(a, b) {

return a - b;

}

module.exports = { add, subtract };

Unfortunately, this code is not going to run in the browser as is, since, as we’ve established, CommonJS modules are not supported by any browser. Let’s run it through esbuild and see what we get.

$ esbuild --bundle module.js

Output

(() => {

var __getOwnPropNames = Object.getOwnPropertyNames;

var __commonJS = (cb, mod) =>

function __require() {

return mod || (0, cb[__getOwnPropNames(cb)[0]])((mod = { exports: {} }).exports, mod), mod.exports;

};

// math.js

var require_math = __commonJS({

"math.js"(exports, module) {

function add2(a, b) {

return a + b;

}

function subtract2(a, b) {

return a - b;

}

module.exports = { add: add2, subtract: subtract2 };

},

});

// module.js

var { add, subtract } = require_math();

console.log(add(1, 2));

console.log(subtract(1, 2));

})();

There’s obviously a lot going on there, but the key thing to note is that all of our code is now in one file, and our require statement has been replaced with a call to a new function, require_math, that loads our code from what was previously the standalone file math.js.

All of the code is wrapped in an IIFE (Immediately Invoked Function Expression, pronounced “iffy”) which keeps any of this code from leaking into the global namespace.

Historical reason 2: HTTP/1.x

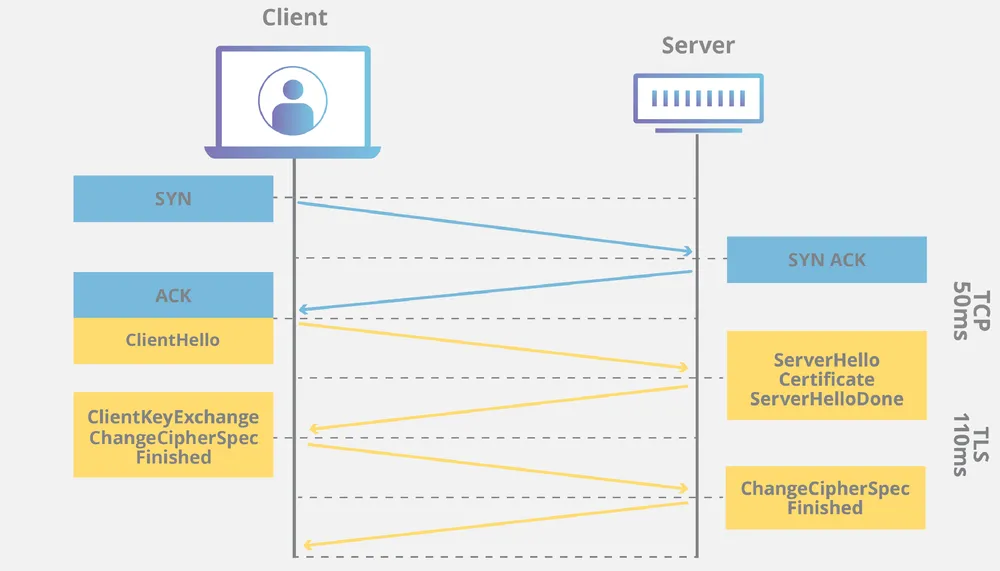

In ancient times (pre-2015), browsers supported only the first major version of the HTTP protocol. Without getting too into the weeds on the nuances of HTTP, suffice it to say that in HTTP/1.x, a TCP connection can carry only one request at a time. HTTP/1.0 closed the connection after every response; HTTP/1.1 added keep-alive, but requests still queue up one after another on each connection, so browsers open several connections per host in parallel to load resources concurrently.

If you’re using TLS, two roundtrips are required to perform a TLS handshake before any HTTP bytes can be transferred, on top of the TCP handshake. Every new connection pays that cost again.

A TLS 1.2 handshake. Blue is the TCP handshake, yellow is the TLS handshake. Graphic courtesy of the Cloudflare Blog

TLS 1.3

Before I get any neckbeards in my DMs letting me know that fewer roundtrips are required to perform a handshake with TLS 1.3, let me say: while that is true, TLS 1.3 wasn’t released until 2018, well after many websites were already using HTTP/2.

Modern websites (even in 2015) have lots of images and custom fonts and other resources external to the HTML. Because HTTP/1.x handles only one request at a time per connection, every additional resource either waits in line behind the others or needs a new connection, with its extra handshake roundtrips.

Bundling Javascript was a convenient way to reduce the number of requests needed to fetch all of your scripts. Since all your Javascript is now squished into one big file, you’ve eliminated potentially dozens of server roundtrips.
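A rough back-of-the-envelope calculation shows why this mattered. The sketch below assumes the worst case, in which every script is fetched over a fresh TLS 1.2 connection, and it ignores parallel connections, keep-alive, and transfer time entirely. The 30 scripts and 50ms roundtrip time are made-up numbers, and handshakeOverheadMs is a hypothetical helper.

```javascript
// Roundtrips spent before the first HTTP byte on a new connection.
const TCP_HANDSHAKE_RTTS = 1;
const TLS12_HANDSHAKE_RTTS = 2;

// Total handshake time for a given number of new connections.
function handshakeOverheadMs(connections, rttMs) {
  return connections * (TCP_HANDSHAKE_RTTS + TLS12_HANDSHAKE_RTTS) * rttMs;
}

const rttMs = 50; // assumed roundtrip time to the server
const scriptCount = 30; // assumed number of separate script files

// Worst case: one new connection per script.
const unbundledOverheadMs = handshakeOverheadMs(scriptCount, rttMs);

// One bundle (or one reused connection): a single handshake.
const bundledOverheadMs = handshakeOverheadMs(1, rttMs);

console.log({ unbundledOverheadMs, bundledOverheadMs });
```

Under these assumptions, the handshake cost grows linearly with the number of connections, which is exactly the cost a single bundle avoided.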

HTTP/2

With HTTP/2, browsers can multiplex many requests over a single TCP connection to the same host, and servers on the other end may even eagerly push resources to the client before they’re requested, greatly increasing pageload performance.

HTTP/2 is now supported by 96% of browsers, and we even have HTTP/3 on the way.

Historical reason 3: ES6 support

Until several years ago, not all browsers supported ES6 features we know and rely on, like classes, arrow functions, the const keyword, and too many others to name.

These features are incredible, and make writing Javascript 100x more pleasant. But because ES6 support was slow to roll out, some transpilation of ES6 was required to ensure your code would work in browsers that did not yet support ES6. This ESX → ES5 transpilation was and remains one of the jobs of the bundler.

Today, 96% of browsers support ES6 features natively without the need for transpilation.

Modern reason 1: Assets

Depending on what your stack looks like, you might need to reference external assets, like images, from your JS. This is also one of the jobs of the bundler. Let’s look at a React example:

import plus from "./plus.svg";

export default function Counter() {

return (

<button>

<img src={plus} />

</button>

);

}

When we run this through esbuild, we get the following output, with the svg inlined directly into the bundle as a URL string.

$ esbuild --bundle --loader:.svg=dataurl --jsx=automatic react.jsx

Output

var plus_default =

'data:image/svg+xml,<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 20 20" fill="currentColor" class="w-5 h-5">%0A <path d="M10.75 6.75a.75.75 0 00-1.5 0v2.5h-2.5a.75.75 0 000 1.5h2.5v2.5a.75.75 0 001.5 0v-2.5h2.5a.75.75 0 000-1.5h-2.5v-2.5z" />%0A</svg>%0A';

// react.jsx

var import_jsx_runtime = __toESM(require_jsx_runtime());

function Counter() {

return /* @__PURE__ */ (0, import_jsx_runtime.jsx)("button", {

children: /* @__PURE__ */ (0, import_jsx_runtime.jsx)("img", {

src: plus_default,

}),

});

}

Modern reason 2: JSX

If you’re old enough to recognize my jQuery example above, you’re probably also old enough to remember that HTML wasn’t always a regular fixture in our client-side JS. React popularized the concept of HTML-in-JS (known as JSX), and is largely responsible for making it a mainstream part of our dev toolkit.

It’s worth noting that my react.jsx example above won’t run in the browser as is. JSX is not part of the JS spec, so the interpreter won’t know what to do with all those angle brackets.

When we transpile that code, the transpiler is ripping out all of that HTML and replacing it with a call to a library function.

Here are the relevant sections again for clarity:

return (

<button>

<img src={plus} />

</button>

);

return /* @__PURE__ */ (0, import_jsx_runtime.jsx)("button", {

children: /* @__PURE__ */ (0, import_jsx_runtime.jsx)("img", {

src: plus_default,

}),

});

What’s import_jsx_runtime.jsx?

Depending on what specific JSX-focused framework you’re using, your transpiler will use some library function to create or update a DOM element from your JSX tree.

In this example, import_jsx_runtime.jsx points to React’s jsx-runtime package. You might also remember React.createElement from older versions of React, or simply h in Preact.
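To see that the transpiled output is nothing more than ordinary function calls, here is a toy version of such a library function. It is a hypothetical stand-in, not React’s or Preact’s actual implementation: this jsx just builds a plain object, and renderToString is an invented helper that turns that object into an HTML string (without the escaping or void-element handling a real renderer needs).

```javascript
// Toy JSX runtime: describe an element as a plain object.
function jsx(type, props) {
  return { type, props: props ?? {} };
}

// Naive renderer for the objects produced by jsx() above.
function renderToString(node) {
  if (typeof node === "string") return node;

  const { children, ...attrs } = node.props;
  const attrText = Object.entries(attrs)
    .map(([name, value]) => ` ${name}="${value}"`)
    .join("");
  // children may be missing, a single node, or an array of nodes
  const kids = children === undefined ? [] : [].concat(children);

  return `<${node.type}${attrText}>${kids.map(renderToString).join("")}</${node.type}>`;
}

// Same shape as the transpiled Counter component, with a plain string for the image.
const tree = jsx("button", {
  children: jsx("img", { src: "plus.svg" }),
});

const html = renderToString(tree);
console.log(html);
```

Real runtimes return richer objects and render to the DOM rather than a string, but the shape of the calls is the same as in the esbuild output.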

Transpilation vs Bundling

I think it is easy to conflate the two concepts, as I have above, but I do want to be clear about the difference between transpilation and bundling.

Bundling, again, is simply the process of concatenating JS code from distinct files into one, larger bundle, and inlining external assets into the same.

Transpiling is the process of transforming your code into semantically identical but syntactically different code.

Transpilation might occur because you’re using ES6 features but are targeting browsers that only support ES5, or in this case, because you want to use JSX instead of writing React.createElement everywhere.

Before I get a howler about having conflated transpilation and bundling in this example, I want to argue that in 2023, many devs would find it a distinction without much of a difference, as the two are rarely used independently. I think the extent to which transpiling and bundling have been conjoined in the JS zeitgeist is attributable to the wild popularity of tools like Webpack and Vite, which serve transparently as both transpiler and bundler.

Later, we’ll cover my preferred setup, using esbuild to transpile only, which allows us to use these great client-side frameworks without bundling, and get a lot of free pageload performance as a result.

Bundling can be a performance detractor

As we’ve covered, I think there are many good historical reasons why we’ve bundled our JS in the past, but I think there are some pretty strong reasons to stop bundling JS code.

As we covered above, with the advent of HTTP/2, serving multiple assets from the same server is much more efficient than it used to be. Unless you’re serving literally hundreds of JS files to your client, it’s probably not worth bundling them together. We get a huge bump in pageload performance for free just by using HTTP/2 to efficiently serve resources over one TCP connection.

There are actually some explicit performance benefits to be gained by serving individual ES Modules, the first being:

Cacheability

Browsers aggressively cache assets from pages you visit. If you’ve ever cleared your browser cache, you know that it can balloon in size, often into the gigabytes.

So what is all this stuff? It’s CSS, JS, images, fonts, and a host of other assets that webpages use to display rich content. The browser caches them so that when you visit a webpage a second time, you don’t have to download that stuff again.

Good frameworks will often rewrite the names of your assets to add a content-based “fingerprint” to the filename to help browsers know if they’ve already cached some version of a file. If you change the file in any way, it gets a new fingerprint to let the browser know that it’s a different file.

For example:

Imagine that we have this simple JS file served by our app:

export function getTime() { return new Date() }

Most frameworks will give this file a fingerprinted name. Sprockets (the asset pipeline for Rails), will assign this file the name:

time-e96f2a9555d6e40450555a1c2a52da3c59175e85043a59c3f2568a0f35770e51.js

^ -------------- this is the fingerprint ----------------------^

Let’s say I get bored with the function keyword:

export const getTime = () => new Date();

Guess what? Now it has a new fingerprint:

time-42c814022932fa83186babe7c496f82947d17c415aaa8bbc31b469b91ebdc5cb.js

In the above example, my change of heart with respect to the function keyword only invalidated 47 bytes of JS. If this had been part of a large bundle including other modules and dependencies, it might have invalidated hundreds of kilobytes or more.

When you serve many small ES modules, the browser only needs to re-download those that have changed since it last saw your webpage. Because you’re very unlikely to touch every file every time you push a new update, you’ll end up serving very little incremental JS to the browser.
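Here is a small sketch of that effect. It is not how Sprockets computes fingerprints: the FNV-1a hash below is a short, non-cryptographic stand-in for a real content digest, and buildManifest and bytesToRedownload are hypothetical helpers invented for this example, as is the second module, math.

```javascript
// 32-bit FNV-1a hash, used here only as a compact stand-in for a content digest.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

// Map each module name to a fingerprinted URL and its size.
function buildManifest(files) {
  return Object.fromEntries(
    Object.entries(files).map(([name, source]) => [
      name,
      { url: `${name}-${fnv1a(source)}.js`, bytes: source.length },
    ])
  );
}

// Sum the size of every module whose fingerprinted URL is new to the browser.
function bytesToRedownload(previous, next) {
  let total = 0;
  for (const [name, entry] of Object.entries(next)) {
    if (!previous[name] || previous[name].url !== entry.url) {
      total += entry.bytes;
    }
  }
  return total;
}

const before = buildManifest({
  time: "export function getTime() { return new Date() }",
  math: "export const add = (a, b) => a + b;",
});

const after = buildManifest({
  time: "export const getTime = () => new Date();", // the only edited module
  math: "export const add = (a, b) => a + b;",
});

const redownloaded = bytesToRedownload(before, after);
console.log(redownloaded);
```

Only the edited time module gets a new URL, so a returning visitor re-downloads just that file while math is served from cache.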

JS Parsing

We’ve talked a lot about downloading as a major performance factor for JS, but what the browser does once the JS is downloaded can have a bigger drag on performance.

Once the browser finishes downloading your JS, it has to parse the JS and compile it to run efficiently in the browser before any of the code can be executed. This can be a more expensive process than the download itself.

The above figure demonstrates JS activity by category for a 437kb bundle from factor.fyi. The lion’s share of time, 382ms, is due to “scripting”, which is any JS activity blocking the main thread. Of this, 119ms is attributable to parsing and compiling.

Compare this with the download time of this bundle at just 75ms.

If your page is heavily dependent on JS for client interactivity, having a large bundle can delay how long it takes for your page to become interactive. Moreover, the ability of popular devices to efficiently parse and compile JS varies wildly. Mobile devices, especially low-cost Android mobile phones, could expect to see parsing and compiling times 3-4x what you would get on higher-end hardware.

While using ES Modules isn’t going to make parsing faster, it provides a built-in mechanism for code-splitting, helping reduce the amount of unused JS on your page that the browser has to parse before your page can become interactive.

My setup

I want to ~~flex~~ share my setup using Rails, esbuild, Typescript and Preact to get dead-simple ES Module support.

Rails Sprockets + ImportMaps

I use Rails and Sprockets to build 99% of my web projects. I’ll save why for another post, but I have a very simple setup for building importmaps in Rails.

# frozen_string_literal: true

require_relative '../../app/lib/import_map'

ImportMap.configure do |map|

# "import" is an external dependency

map.import '@hotwired/stimulus'

map.import '@hotwired/turbo-rails'

map.import 'preact', include_subpackages: true

map.import 'framer-motion', alias: 'react:preact/compat'

map.import 'dayjs', include_subpackages: true

# "module" is a local module

map.module 'dayjs-init'

# "mount_directory" adds all modules from a given dir, optionally recursive

map.mount_directory 'controllers'

end

# This will translate to something like:

=begin

{

"imports": {

"@hotwired/stimulus": "https://esm.sh/@hotwired/stimulus@^3.2.2?",

"@hotwired/turbo-rails": "https://esm.sh/@hotwired/turbo-rails@^7.3.0?",

"preact": "https://esm.sh/preact@^10.15.0?",

"preact/": "https://esm.sh/preact@^10.15.0&/",

"framer-motion": "https://esm.sh/framer-motion@^10.12.18?alias=react%3Apreact%2Fcompat",

"dayjs": "https://esm.sh/dayjs@^1.11.9?",

"dayjs/": "https://esm.sh/dayjs@^1.11.9&/",

"dayjs-init": "/assets/javascript/dayjs-init-ec6c761b40bf461c97802604889b78483fba390ed83fcb83392ec41c42335cd7.js",

"controllers/video-animator": "/assets/javascript/controllers/video-animator-4ad13c3a74253bc208a6d3ec79d8c3d65817f3ecd1de9759f18945c6d413c882.js"

}

}

=end

<!DOCTYPE html>

<html>

<head>

<meta name="viewport" content="width=device-width,initial-scale=1">

<%= csrf_meta_tags %>

<script type="importmap">

<%= ImportMap.to_json.html_safe %>

</script>

...

class ImportMap

class Configuration

def initialize

@imports = []

@modules = Set.new

end

def import(name, **options)

dep_alias, include_subpackages = options.values_at(:alias, :include_subpackages)

version = package_version(name)

@imports << { name:, version:, remote: remote_specifier(name, version, dep_alias:) }

return unless include_subpackages

slash_name = "#{name}/"

@imports << { name: slash_name, version:, remote: remote_specifier(name, version, dep_alias:, slash: true) }

end

def module(name)

@modules.add(name)

end

def mount_directory(folder, recursive: false)

root_path = Rails.root.join('app/javascript', folder)

globbable = Rails.root.join("#{root_path}/*.{ts,tsx}").to_s

globbable = Rails.root.join("#{root_path}/**/*.{ts,tsx}").to_s if recursive

Rails.root.glob(globbable).map(&:to_s).each do |path|

next if path.include?('.test')

relative_to_root = Pathname.new(path).relative_path_from(root_path).dirname

joinable = [folder, relative_to_root, File.basename(path, File.extname(path))].reject { _1.to_s == '.' }

module_name = File.join(*joinable)

@modules << module_name

end

end

attr_reader :imports, :modules

private

def package_version(package)

package_path = Rails.root.join('package.json')

package_dependencies = JSON.parse(package_path.read)['dependencies']

raise "Package #{package} not found in #{package_path}" unless package_dependencies.key?(package)

package_dependencies[package]

end

def remote_specifier(name, version, slash: false, dep_alias: nil)

base = "https://esm.sh/#{name}@#{version}"

query = { alias: dep_alias }.compact

return "#{base}&#{query.to_query}/" if slash

"#{base}?#{query.to_query}"

end

end

class << self

def config

@config ||= Configuration.new

end

def configure

yield config

end

def to_json(*_args)

all_imports = config.imports.to_h { [_1[:name], _1[:remote]] }

all_components = config.modules.index_with do |mod|

module_specifier(mod)

end

JSON.pretty_generate({ imports: { **all_imports, **all_components } })

end

def module_specifier(name)

return "/assets/javascript/#{name}.js" unless Rails.env.production?

ActionController::Base.helpers.javascript_path("javascript/#{name}")

end

end

end

There are three main things I want to highlight above:

- config/initializers/import_map.rb contains a declaration of all the dependencies I want to be included in my importmap. Any remote dependencies are resolved using esm.sh with the version from package.json.

- In my application layout, I added an importmap script in the head element which renders the JSON output of ImportMap.

- The ImportMap class handles declaring the resolutions for external dependencies, as well as resolving local dependencies from Sprockets.
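For readers who don’t speak Ruby, the remote-dependency half of that class boils down to something like the following. This is a loose sketch rather than a port of my actual code: remoteSpecifier and buildImports are illustrative names, the dependencies object stands in for the "dependencies" section of package.json, and the subpackage ("name/") entries are left out.

```javascript
// Build an esm.sh URL for a package pinned to the version range in package.json.
function remoteSpecifier(name, version, alias) {
  const base = `https://esm.sh/${name}@${version}`;
  return alias ? `${base}?alias=${encodeURIComponent(alias)}` : base;
}

// Turn a list of wanted packages into the "imports" table of an importmap.
function buildImports(dependencies, wanted) {
  const imports = {};
  for (const { name, alias } of wanted) {
    const version = dependencies[name];
    if (version === undefined) {
      throw new Error(`Package ${name} not found in dependencies`);
    }
    imports[name] = remoteSpecifier(name, version, alias);
  }
  return imports;
}

// Stand-in for the "dependencies" section of package.json.
const dependencies = {
  preact: "^10.15.0",
  "framer-motion": "^10.12.18",
};

const imports = buildImports(dependencies, [
  { name: "preact" },
  { name: "framer-motion", alias: "react:preact/compat" },
]);

console.log(JSON.stringify({ imports }, null, 2));
```

Because the version comes from package.json, bumping a dependency there is enough to change the URL the browser resolves.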

Why not use importmap-rails?

Well, it famously does not, and will not, support Typescript. Luckily, it’s not that hard to roll your own using Sprockets and esbuild.

Typescript

I love Typescript, I use it whenever I can. I’ll again save the long-winded extolling of values for another post. One thing to note, however, is that all imports of local modules must be non-relative. Using relative paths in your source code would force you to add a distinct importmap entry for every possible relative path to a module. That would be very annoying to implement so a better approach is to just ensure that you’re always using the same import declaration for a module that appears in your importmap.

Using non-relative paths requires some config changes in Typescript in order for the compiler to successfully type-check your code.

For example:

import type { VNode } from 'preact';

/**

* 'utilities/dollarsigns' is a local module referenced

* with a non-relative path.

* We need to tell typescript how to resolve this non-relative path

*/

import dollarSigns from "utilities/dollarsigns";

export default function PriceLevel(props: { count: number }): VNode {

return (

<p>Price: {dollarSigns(props.count)}</p>

);

}

{

"compilerOptions": {

// ...

// paths helps typescript map a non-relative path to a relative one

"paths": {

"utilities/*": ["./app/javascript/utilities/*"],

}

},

}

{

"imports": {

// ...

"utilities/dollarsigns": "/assets/javascript/dollarsigns-abcd0123.js"

}

}

esbuild

esbuild is great if you’re like me and prefer very simple, straightforward tools. By those yardsticks, esbuild is fantastic: fast, predictable, and requires limited configuration out of the box. It’s also set up perfectly to transpile your code for ES Modules.

I have a pretty straightforward esbuild setup that globs all of my JS entrypoints and outputs them into a folder where they can be picked up by Sprockets:

import * as esbuild from 'esbuild'

import { glob } from "glob";

(async () => {

// Ignore all test files

const ignore = {

ignored: (p) => /^.*\.test\.tsx?$/.test(p.name),

}

let entryPoints = await glob("./app/javascript/**/*.*", {

ignore

});

// also transpile any js associated with a ViewComponent

entryPoints = entryPoints.concat(await glob("./app/components/**/*.ts", { ignore }));

const buildContext = await esbuild.build({

entryPoints,

bundle: false,

format: "esm", // 👈 this is the key config

outdir: "app/assets/builds", // sprockets will pick up transpiled assets from here

publicPath: "assets",

loader: {

".svg": "dataurl",

},

});

})();

With this config, esbuild is doing almost nothing to my source code. With my setup (Typescript and Preact), it’s just stripping types and transpiling JSX. If I weren’t using either Typescript or Preact, I wouldn’t need this step at all.

Show me

Here’s a simple preact component from one of my projects:

import cx from "clsx";

import { VNode } from "preact";

type Props = {

class?: string;

Icon: VNode;

onClick: () => void;

};

export default function IconButton(props: Props): VNode {

return (

<button

onClick={props.onClick}

class={cx(

"flex h-10 w-10 items-center justify-center rounded-full border border-slate-200 bg-white shadow-sm transition hover:border-slate-400 hover:shadow",

props.class

)}

>

{props.Icon}

</button>

);

}

import { jsx } from "preact/jsx-runtime";

import cx from "clsx";

function IconButton(props) {

return /* @__PURE__ */ jsx(

"button",

{

onClick: props.onClick,

class: cx(

"flex h-10 w-10 items-center justify-center rounded-full border border-slate-200 bg-white shadow-sm transition hover:border-slate-400 hover:shadow",

props.class

),

children: props.Icon

}

);

}

export {

IconButton as default

};

ES Module CDN: esm.sh

Using CDNs to deliver ES Modules to the browser has a bunch of advantages:

- No marginal server load: don’t waste your own CPU cycles delivering someone else’s code.

- Reduced latency: CDN edge nodes are typically going to be a lot closer to your users than your server is.

- No marginal cost: They’re totally free to use.

There are a bunch of really great ES Module CDNs out there, but I use esm.sh. esm.sh has some really cool features, like the ability to alias dependencies to support things like preact/compat, and has generally been very solid for my needs.

Server-side rendering

Rails in many ways is the archetypal MVC framework. Server rendering is what it’s designed for and what it’s really, really good at. That being said, if you’re using a client-rendered framework on top of Rails to sprinkle in bits of interactivity, you can end up with some lag in interactivity while your React/Preact components mount. This is a topic for an entirely separate blog post, so I’ll cover my setup and a few learnings briefly.

- I try really hard not to have any fully client-rendered pages, especially my landing page. It’s both bad for SEO, being hard for some search engines to crawl, and it adds a ton of wait time for the page to become interactive. We try to sprinkle in rich components among server-rendered markup.

- Use a separate Node server to SSR your Preact components. I’ll write another blog post about this sometime, but in essence what we do is use a ViewComponent to call our Preact SSR server during rendering, which returns HTML that’s sent back to the client, along with some client-side JS to hydrate the component if needed.

- If you can’t do the above, try not to use any fully client-rendered components above the fold on your landing page.

Next, Nuxt, and the rest

I don’t have too much experience with these. If they do all of these things right out of the box, great! Let me know and I’ll add an addendum.

This website was built using Astro which also evangelizes the reactivity-islands approach and does handle all of these ES Module niceties for you.

The net-net

Page performance actually matters, and not just because performance optimization feels technically satisfying. Page performance has real impacts on people and businesses.

Philosophically, life is short and it’s kinda sad that we’ll spend a nontrivial percentage of our lives just waiting for websites to load. Less philosophically, page performance has impacts on all businesses. Page speed affects SEO, conversion, and engagement, and neglecting it can mean fewer customers and less money.

My point, simply, is this: don’t shoot yourself in the foot. A modern stack with modern, simple tooling can deliver a meaningful bump in pageload performance with very little lift on your part.

🗣️ Send me a howler

Have a comment on this post, something to point out, or just want to say hi? Take a deep breath, count to ten, and then put it in writing to stevendcoffey@gmail.com 😌